wiesiek.euPyarrow write_tablebritish nightclub sluts tumblrbritish no 1 hit singlesbritish no 1 singlesbritish no 1 singles 1965british no 1 singles 2016british no.1 singles by datebritish northern slutbritish northern slutsbritish nri fuckbritish nude sex |

wiesiek.eu

vanguard software license not found

bear hug gif funny

traditions 32 caliber squirrel rifle

puppies for sale in massachusetts under $500

barstool jmu

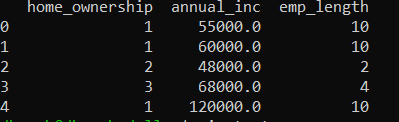

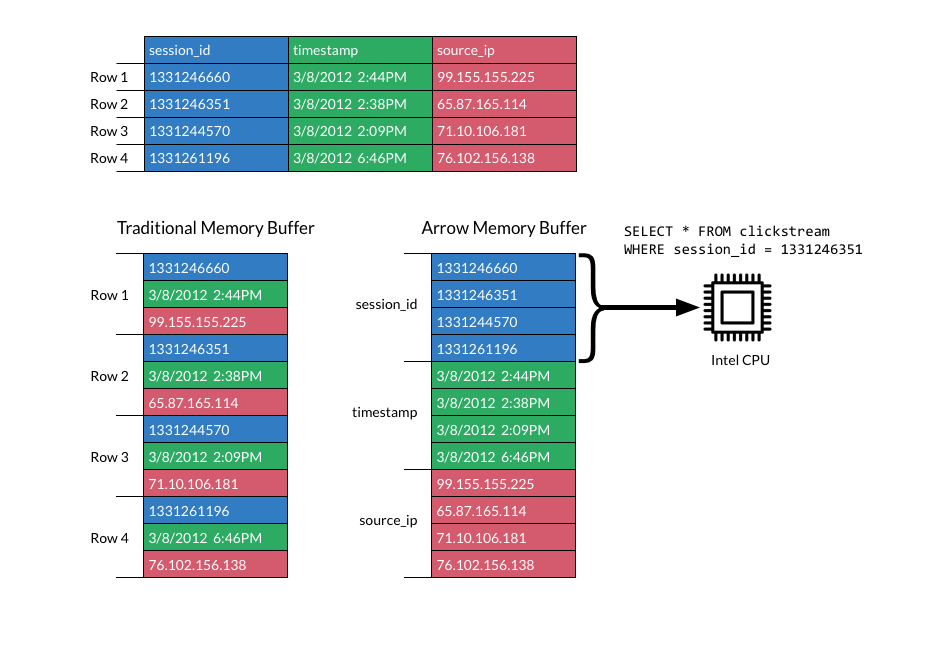

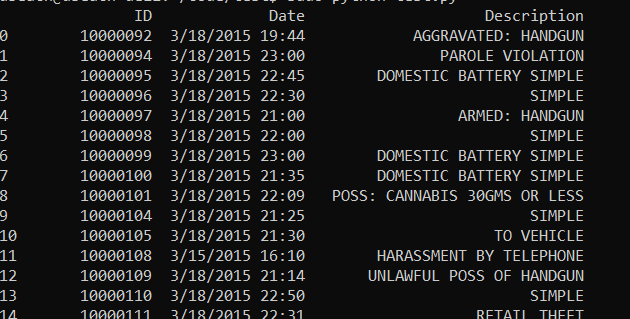

PyArrow is a popular Python library that provides a high-performance interface for working with Arrow data, a columnar in-memory data format. One of the key functionalities of PyArrow is the ability to write tables to various file formats using the `write_table` method. The `write_table` method allows users to write a PyArrow table to a file or a file-like object. This is particularly useful when working with large datasets that need to be stored efficiently or shared with others. By using `write_table`, users can take advantage of the efficient columnar storage format of Arrow, resulting in faster data access and compression. When using `write_table`, there are several important parameters to consider. Firstly, the `table` parameter specifies the PyArrow table that needs to be written to the file. This table should be created beforehand, either by reading data from a file or by manually constructing it using PyArrows data types and arrays. The `where` parameter allows users to specify a condition for writing only specific rows from the table. This can be useful when working with large datasets and only a subset of the data needs to be written to the file. By using this parameter, users can reduce the size of the output file and improve the overall performance of the process. Another important parameter is `filesystem`. This parameter allows users to specify the file system to be used when writing the table. PyArrow supports various file systems, including local file systems, Hadoop Distributed File System (HDFS), and Amazon S3. By specifying the appropriate file system, users can easily write tables to the desired storage location. The `write_table` method also supports additional parameters for configuring the output file format and compression options. For example, the `write_table` method supports writing tables to Parquet files, which is a popular columnar file format for big data processing. Users can specify the desired Parquet file format version, compression codec, and other options to optimize the output file size and performance. Additionally, PyArrow provides a `write_table` method that accepts a file-like object as the output destination. This allows users to write tables to various destinations, such as in-memory buffers or network sockets. By using this flexibility, users can integrate PyArrow with other Python libraries or frameworks and seamlessly transfer data between different components of their data processing pipelines. To optimize the performance of `write_table`, there are several best practices to consider. Firstly, it is recommended to construct PyArrow tables using the `Table.from_pandas` method when working with Pandas DataFrames. This method efficiently converts Pandas DataFrames to PyArrow tables, preserving the columnar data format and avoiding unnecessary data copies. Another important optimization technique is to leverage parallelism when writing large tables. PyArrow provides a `write_table` method that supports writing tables in parallel, which can significantly improve the overall performance. By specifying the number of threads or processes to be used, users can distribute the workload across multiple CPU cores or machines and achieve faster data writes. Users should also consider the compression options when writing tables. PyArrow supports various compression codecs, such as Snappy, Gzip, and LZO, which can be specified using the `compression` parameter. Choosing the appropriate compression codec depends on the specific use case and the trade-off between compression ratio and CPU usage. Users can experiment with different compression options to find the optimal configuration for their workload. In conclusion, the `write_table` method in PyArrow is a powerful feature that allows users to efficiently write PyArrow tables to various file formats. By leveraging the columnar data format of Arrow and optimizing the parameters and options, users can achieve faster data writes and reduce the file size. With its support for different file systems and compression codecs, PyArrow provides a flexible and high-performance solution for writing tables in Python. pyarrow.parquet.write_table — Apache Arrow v12.0.1. Parameters: table pyarrow.Table where str or pyarrow.NativeFile row_group_size int Maximum size of each written row group. If None, the row group size will be the minimum of the Table size and 64 * 1024 * 1024 pyarrow write_table. version{"1.0", "2.4", "2.6"}, default "2.4"

british nightclub sluts tumblr. table = pa.Table.from_arrays( [arr], names=["col1"]) pyarrow write_table. pyarrow.dataset.write_dataset — Apache Arrow v12.0.1. The data to write

british no 1 hit singles. If an iterable is given, the schema must also be given. base_dir str The root directory where to write the dataset. basename_template str, optional A template string used to generate basenames of written data files. pyarrow write_table

british no 1 singles. Initialize self. See help (type (self)) for accurate signature. Methods Attributes add_column(self, int i, field_, column) ¶ Add column to Table at position. A new table is returned with the column added, the original table object is left unchanged. Parameters i ( int) - Index to place the column at.. How to write on HDFS using pyarrow - Stack Overflowbritish no 1 singles 1965. write_table write_table takes multiple parameters, few of those are below: table -> pyarrow.Table where -> this can be a string or the filesystem object filesystem -> Default is None Example pq.write_table (table, path, filesystem = fs) or with fs.open (path, wb) as f: pq.write_table (table, f) write_to_dataset pyarrow write_table. How to use the pyarrow.parquet.write_table function in pyarrow - Snyk. pyarrow functions pyarrow.parquet.write_table View all pyarrow analysis How to use the pyarrow.parquet.write_table function in pyarrow To help you get started, weve selected a few pyarrow examples, based on popular ways it is used in public projects. Secure your code as its written.. Pandas Integration — Apache Arrow v12.0.1. Conversion from a Table to a DataFrame is done by calling pyarrow.Table.to_pandas (). The inverse is then achieved by using pyarrow.Table.from_pandas (). pyarrow write_table. pyarrow.parquet.write_table — Apache Arrow v4.0.0.dev712+g772de38b7 .british no 1 singles 2016

british no.1 singles by date. However, Ive noticed that train_test_split["train"].data, test_val_split["train"].data, and test_val_split["test"].data are identical, suggesting that they all point to the same underlying PyArrow Tablebritish northern slut. This means that the split datasets are not independent, as I expected. pyarrow write_table. [C++] [Python] Wrap / Unwrap Functions for Other PyArrow Objects. Describe the enhancement requested The PyArrow C++ module provides wrapper and unwrapper functions for a variety of Arrow entities, including schemas, arrays, record batches, and tables. Examples are: arrow::python::wrap_table or pyarrow. pyarrow write_table. python - How to control whether pyarrow.dataset.write_dataset will .. 2 Answers Sorted by: 6 Currently, the write_dataset function uses a fixed file name template ( part- {i}.parquet, where i is a counter if you are writing multiple batches; in case of writing a single Table i will always be 0).. PDF pyarrow Documentation - Read the Docs. 57 Arrow is a columnar in-memory analytics layer designed to accelerate big data pyarrow write_table. It houses a set of canonical in-memoryrepresentations of flat and hierarchical data along with multiple language-bindings for structure manipulation pyarrow write_table. It alsoprovides IPC and common algorithm implementations. This is the documentation of the Python API of Apache Arrow. pyarrow write_table. python - BufferError: memoryview has 1 exported buffer trying to close . pyarrow write_table. I have two problems when Im using Arrow types like string[pyarrow] inside a dataframe: When I call the close on the sm_put and after receiving the dataframe I call the close on the sm_get I get instantly an error, that causes the code to stop executing: sm_get.close() -> self.close() -> self._buf.release() -> BufferError: memoryview has 1 . pyarrow write_table. Using pyarrow how do you append to parquet file? pyarrow write_table. This method is used to write pandas DataFrame as pyarrow Table in parquet formatbritish northern sluts. If the methods is invoked with writer, it appends dataframe to the already written pyarrow table. :param dataframe: pd.DataFrame to be written in parquet format. :param filepath: target file location for parquet file.. A gentle introduction to Apache Arrow with Apache Spark and Pandas. Apache Arrow is a cross-language development platform for in-memory data. It specifies a standardized language-independent columnar memory format for flat and hierarchical data, organized for efficient analytic operations on modern hardware. [ Apache Arrow pagebritish nri fuck. Paweł Pindel - Technical Team Lead - gravity9 | LinkedIn. Hi! I am Paweł, Senior Software Developer focused on Microsoft technologies. I work in different areas - especially in backend and DevOps pyarrow write_table. However, I love backend and architecting cloud solutions and process automation with CI/CD. In the evenings I write blog posts at etsharpdev.com. Spending my free time on mountain hiking, bushcrafting and sailing. | Learn more about Paweł Pindel .. Karolina Góra - Proposal Writer - OPIS s.r.l. | LinkedInbritish nude sex. Proposal Writer with experience in managing and preparing the proposal for CRO pyarrow write_table. Experienced with supporting team in Excel related issues and managing databases

|